Incident Report: IMAP/POP Brute-Force Attack, March 29, 2022

Summary

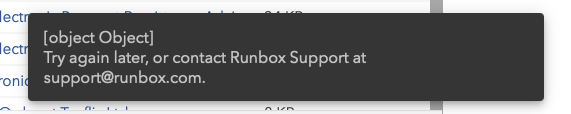

The incident on March 29 affecting our IMAP/POP service caused interruptions for a large number of Runbox accounts between approximately 11 and 23 CEST. Extensive investigations during and after the incident revealed that the interruptions were caused by brute-force login attempts against the Runbox Dovecot IMAP/POP servers from a number of IP addresses. The cause was difficult to identify because it was masked by consequential errors on the Dovecot proxy servers and by authentication-related issues.

Record of events and mitigation efforts

A thorough review of our records and server logs indicates that login attempts on our Dovecot proxy servers gradually increased from a normal level at 11 CEST by approximately:

135% between 12 and 13 CEST,

209% between 13 and 14 CEST,

308% between 14 and 15 CEST.

At this point our system administration team at Copyleft Solutions was alerted to IMAP/POP connection issues, ranging from slow connections to no connections at all. They proceeded to reboot the Dovecot servers and then the Dovecot proxy servers, which led to consequential authentication and proxying issues for a short while. Subsequently, an increasing number of successful connections was recorded, but the login problems largely persisted.

Further investigation revealed what appeared to be a targeted brute-force attack on our IMAP service that effectively denied legitimate connections from a significant number of users. Once the brute-force attack was confirmed, further analysis of server logs showed that a significant number of IP addresses had reached our Dovecot services despite our automatic, authentication-based brute-force prevention systems.

Our system administration team subsequently blocked the most frequently seen IP addresses by adding them to the central firewall. This appeared to alleviate the situation, as the login volume dropped to 246% between 17 and 18 CEST. However, connections to the Dovecot servers were still timing out, so Runbox staff were alerted and a joint effort began. Our staff routinely work from different geographic locations to cover all time zones, and some of them had patchy cell phone coverage at the time, which further delayed our response.
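Picking "the most frequently seen IP addresses" comes down to counting failed logins per source address in the server logs. The sketch below shows one way to do that. It is only an illustration and not the tooling our administrators used. It assumes Dovecot login-process lines that contain the text "auth failed" and give the remote address as `rip=<address>`. Your log format may differ. The function name `top_failed_login_sources` is hypothetical, and the addresses are documentation examples.

```python
import re
from collections import Counter

# Assumption: failed logins appear as Dovecot login-process lines containing
# "auth failed" and the remote IP as "rip=<address>". Adjust to your own logs.
RIP_PATTERN = re.compile(r"\brip=([0-9A-Fa-f:.]+)")


def top_failed_login_sources(lines, limit=10):
    """Return up to `limit` (ip, count) pairs, most failed logins first."""
    counts = Counter()
    for line in lines:
        if "auth failed" not in line:
            continue  # only count failed authentication attempts
        match = RIP_PATTERN.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts.most_common(limit)


if __name__ == "__main__":
    # Hypothetical sample lines using documentation IP ranges.
    sample = [
        "imap-login: Disconnected (auth failed, 1 attempts in 2 secs): user=<a>, method=PLAIN, rip=192.0.2.10, lip=198.51.100.1",
        "imap-login: Disconnected (auth failed, 1 attempts in 2 secs): user=<b>, method=PLAIN, rip=192.0.2.10, lip=198.51.100.1",
        "imap-login: Disconnected (auth failed, 1 attempts in 3 secs): user=<c>, method=PLAIN, rip=203.0.113.7, lip=198.51.100.1",
        "imap-login: Login: user=<d>, method=PLAIN, rip=198.51.100.20, lip=198.51.100.1",
    ]
    for ip, count in top_failed_login_sources(sample):
        print(ip, count)
```

A ranking like this only shows where to start. In practice, addresses that are spread across many sources, as in this incident, can keep each individual count low enough to slip under per-IP thresholds.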

Investigation and attack mitigation continued, and we attempted to block additional IP addresses in the firewall on the Runbox gateway servers. However, the attack volume then increased to approximately 327% between 18 and 19 CEST and remained at roughly that level for the next few hours.

The mail authentication service on the Dovecot proxy servers continued to experience issues, and investigations continued to determine whether these problems were caused by brute-force attacks themselves or by cascading issues in the infrastructure.

By 21 CEST the attack volume had started to decrease, first to approximately 292% and subsequently to:

269% between 22 and 23 CEST,

200% between 23 and 24 CEST,

74% between 00 and 01 CEST the next day.

Around midnight the Dovecot services were restarted once again and the situation normalized, after which the login volume returned to a level similar to that before the incident.

The incident was an isolated but serious event that calls for improvements in how we handle and respond to such situations. Our company is on a sound footing and continues to grow, which puts us in a position to make the necessary improvements.

Adjustments to monitoring and alerts

Based on our experiences from this incident we are initiating adjustments and improvements to our service monitoring and alert instrumentation.

Level of monitoring

Our team is determined to increase service monitoring to a level that gives us a complete overview of our services' availability and reliability, so that we can scale and make improvements ahead of time. As our infrastructure has grown in size and complexity, we currently use a combination of Munin, Nagios, and Check_MK for this purpose.

However, especially during periods of high traffic, these systems can together produce an excessive number of alerts each day, which is hard for our staff to process effectively. We are now working to consolidate on one or two systems, which will leave a smaller set of alerts to manage.

It can also be challenging to distinguish between real and false alarms, so together with our system administration team we have started a review of our monitoring thresholds.

Alert instrumentation

We also use the external availability monitoring service NodePing, which checks whether registered servers are responding to requests from various geographic locations. If a Runbox service or server fails to respond within a given interval and number of rechecks, NodePing sends alerts via email, to our IRC server, and as text (SMS) messages.

These checks have, however, become a double-edged sword. They are quite sensitive and in some cases flag short timeouts caused by servers briefly being in a wait state. Such timeouts may not be noticeable to end users, yet in some instances the alerts they trigger have drowned out alerts about actual outages.

We want to keep the information these sensitive checks provide, since it helps us identify and resolve intermittent IMAP timeout issues. We have therefore added a new set of NodePing alerts with a longer check interval and a higher number of rechecks, intended to alert Runbox staff via SMS about actual outages. Depending on the results of these changes, we are also considering apps such as Pushover to ensure that our staff become aware of problems.
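The reasoning behind the two tiers of alerts can be illustrated with a simplified model. In this model an alert fires only after a run of consecutive failed checks: the first failure plus the configured number of rechecks. The sketch below is a hypothetical illustration of that idea and does not reproduce NodePing's actual logic. The function name `first_alert_index` and the check sequences are made up for the example.

```python
from typing import Optional, Sequence


def first_alert_index(results: Sequence[bool], rechecks: int) -> Optional[int]:
    """Return the index of the check at which an alert would fire, or None.

    results: one entry per check, True if the server responded in time.
    rechecks: extra consecutive failures required after the first failure.
    Simplified, hypothetical model; not NodePing's actual implementation.
    """
    needed = rechecks + 1
    streak = 0
    for i, ok in enumerate(results):
        streak = 0 if ok else streak + 1  # reset on success, grow on failure
        if streak >= needed:
            return i
    return None


if __name__ == "__main__":
    brief_wait_state = [True, False, True, True]         # one short timeout
    real_outage = [True, False, False, False, False]     # sustained failure

    # Sensitive tier: alert on the first failed check.
    print(first_alert_index(brief_wait_state, rechecks=0))
    # Outage tier: require two rechecks, so the brief blip is ignored...
    print(first_alert_index(brief_wait_state, rechecks=2))
    # ...but a sustained failure still triggers an alert.
    print(first_alert_index(real_outage, rechecks=2))
```

Under this model the sensitive tier still records every blip for later investigation. The outage tier pages staff only when failures persist. The cost is a detection delay of roughly the number of rechecks multiplied by the recheck interval.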

In the longer term, we are considering individual monitoring of additional internal services to get a clearer picture of emerging issues, ideally before they become actual problems. These measures will also require us to consider how Runbox staff and our system administration team at Copyleft Solutions work together.

Improvements to communication

We regret that our communication with our customers during this incident fell short of what was adequate and did not match the seriousness of the situation.

Although we already have established communication lines for incidents, we are now expanding them with improved procedures for future incidents to ensure that our teams update each other frequently. This in turn will allow us to keep our customers better and more frequently informed via our status page, our community forum, and social media.

We are also establishing a new escalation procedure using several different communication channels with increasing alarm levels, integrating email, IRC, and various smartphone apps in addition to text messages and phone calls.

Again, we thank you for your continued support while we implement these improvements.

– Geir